The Query Optimizer in SQL Server is an amazing piece of code. It takes any query we choose to throw at it, evaluates many different ways of executing that query and, generally speaking, comes up with an execution plan that is going to be good enough. And it does all that in milliseconds. Sometimes it gets bad press when it arrives at a sub-optimal plan, but seriously, could you do better, all of the time? I know I couldn’t!

But there are times when you can help the optimizer. It may be smarter than most of us, but you can coerce it. It does listen.

Take this AdventureWorks2014 query as an example

set statistics io on; go select BusinessEntityID , Title , FirstName , LastName from Person.Person where ModifiedDate < '1 jan 2006'

if we execute this with ‘Actual Execution Plan’ on , and look at the results, output and we get an empty result set

BusinessEntityID Title FirstName LastName ------------------------------------------------ (0 row(s) affected) Table 'Person'. Scan count 1, logical reads 3819, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

The output from set statistics io shows that we hit 3819 pages of table Person (which is the entire table by the way) and the execution plan shows us a Clustered Index Scan.

So what’s happened here? There is no index on ModifiedDate, meaning that predicate in the where clause isn’t something we can seek on, so the Query Optimizer has decided that we will have to read every row in Person.Person, via the Clustered Index (i.e the table) and check the value of ModifiedDate

There are no rows that match the query we wrote, but SQL has no idea there are no rows until it executes. Note the query cost of 2.84599

Let’s add something: a check constraint. Check constraints are used to limit the range of values that can be stored in a column or a set of columns. We know from the query above that no data matched the query, ie. no rows existed with a ModifiedDate earlier than 1 January 2006, so lets add a constraint that prevents us from ever putting data in that would match.

-- add a check constraint

if object_id('Person.ModifiedDateConstraint','C') is not null

alter table Person.Person drop constraint ModifiedDateConstraint;

go

alter table Person.Person with check

add constraint ModifiedDateConstraint check (ModifiedDate > '1 jan 2006');

Seems like a safe thing to do here as I’m not going to time-travel back 11 years and update this database!

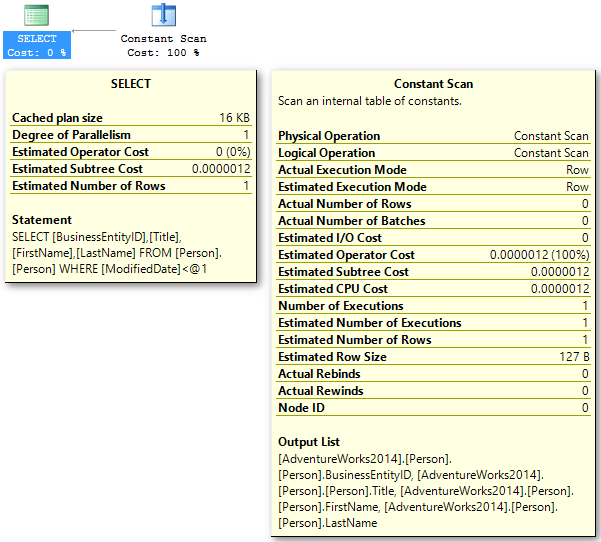

Now when I rerun the query, I get the same results (i.e. none), there is no output from set statistics io at all, and the execution plan is much different:

--rerun query

set statistics io on;

select

BusinessEntityID ,

Title ,

FirstName ,

LastName

from Person.Person

where ModifiedDate < '1 jan 2006'

BusinessEntityID Title FirstName LastName ------------------------------------------------ (0 row(s) affected)

There is just a Constant Scan, the constant being the value ‘1 jan 2006’ that I passed in to the query. All SQL Server had to do here was check the value of what I wanted to the known values of what is allowed in the column (via the check constraint). Since there could not be any rows that match, SQL doesn’t even have to access the data at all – hence the lack of set statistics io output. Query cost is now 0.0000012

--clean up

if object_id('Person.ModifiedDateConstraint','C') is not null

alter table Person.Person drop constraint ModifiedDateConstraint;

go

set statistics io off;

go

Round up

By adding this constraint, we’ve helped the optimizer make a better choice – and ok I agree this is a convoluted example – but it shows how the design of your schema and objects is important and can have serious performance implications.